Test Automation Basics - Levels, Pyramids & Quadrants

Posted By Duncs ~ 16th July 2012

This post started life in my series on hexagonal architecture, but it became too unwieldy & wasn’t directly related to the topic, so here it is in all its own glory.

This post is now a brief introduction to my understanding of test automation, the test automation pyramid & the testing quadrants, and it serves as a reference for the hexagonal architecture series.

This section covers test automation & the associated test levels, plus a bit on Brian Marick’s Testing Quadrants (which I’ve found really useful for testing in an agile environment).

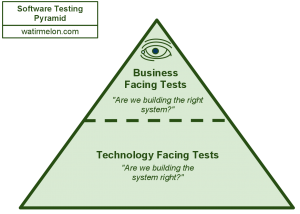

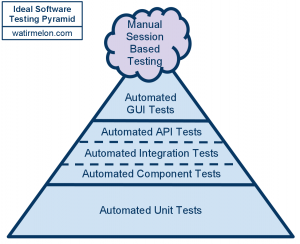

I subscribe to the idea of the automation pyramid (taken from James Crisp’s post on test automation). The image above shows the test levels in a pyramid shape & demonstrates the relationship between the number of tests at each level in a test suite.

A very thorough analysis of the test automation pyramid can also be found in The Tar Pit.

The main idea is that you want more unit tests than any other level of test because they:

- test the behaviour of the class / component rather than its implementation

- have no dependencies on other classes / components

- are easy to write & maintain (due to their independence)

- are quick to run

This makes them the most cost-efficient way of testing individual or closely related classes, but on their own they don’t provide enough coverage to give the development team confidence in the code.
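To make the unit level a bit more concrete, here’s a minimal sketch in Python (my choice of language; nothing in this post depends on it). `DiscountCalculator` & `FixedRate` are hypothetical names made up for illustration. The class under test receives its only collaborator through its constructor, so the test can hand it a stub instead of a real rate service. That keeps the test independent & quick, and it only checks the result (behaviour), not how the result was calculated.

```python
class DiscountCalculator:
    """Hypothetical class under test: applies a percentage discount to a price."""

    def __init__(self, rate_source):
        # rate_source is any object with a current_rate() method (assumed interface)
        self._rate_source = rate_source

    def discounted(self, price):
        rate = self._rate_source.current_rate()
        return round(price * (1 - rate), 2)


class FixedRate:
    """Stub standing in for a real rate provider, so the unit test has no external dependency."""

    def __init__(self, rate):
        self._rate = rate

    def current_rate(self):
        return self._rate


def test_applies_discount_rate():
    calc = DiscountCalculator(FixedRate(0.25))
    assert calc.discounted(100.0) == 75.0


def test_zero_rate_leaves_price_unchanged():
    calc = DiscountCalculator(FixedRate(0.0))
    assert calc.discounted(19.99) == 19.99


if __name__ == "__main__":
    # A runner such as pytest would discover the test_ functions itself;
    # calling them directly lets the file run on its own too.
    test_applies_discount_rate()
    test_zero_rate_leaves_price_unchanged()
    print("unit tests passed")
```

Because the stub is passed in rather than created inside the class, swapping it for the real rate provider later needs no change to `DiscountCalculator`.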

So to increase coverage, we move up to integration tests which:

- test the integration between components / features / external 3rd party services

- are dependent on the other components / features / external 3rd party services being available & stable enough to execute tests against

- ensure dependencies of the system being developed continue to work as expected

- are slower than unit tests to run, but faster than acceptance tests to run
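A comparable sketch one level up. Here a hypothetical `CustomerRepository` is tested against a real database engine rather than a stub. I’ve assumed Python’s built-in `sqlite3` module with an in-memory database purely so the example runs anywhere. In a real project the dependency would more likely be the actual database or 3rd party service, which is exactly what makes these tests slower & dependent on that service being available.

```python
import sqlite3


class CustomerRepository:
    """Hypothetical component that persists customers through a real SQLite connection."""

    def __init__(self, conn):
        self._conn = conn
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS customers "
            "(id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def add(self, name):
        cur = self._conn.execute("INSERT INTO customers (name) VALUES (?)", (name,))
        self._conn.commit()
        return cur.lastrowid

    def find(self, customer_id):
        row = self._conn.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


def test_customer_round_trips_through_database():
    # The real SQLite engine is exercised here (SQL, driver, schema),
    # not just our own logic, which is what makes this an integration test.
    conn = sqlite3.connect(":memory:")
    try:
        repo = CustomerRepository(conn)
        new_id = repo.add("Ada")
        assert repo.find(new_id) == "Ada"
        assert repo.find(new_id + 1) is None
    finally:
        conn.close()


if __name__ == "__main__":
    test_customer_round_trips_through_database()
    print("integration test passed")
```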

Up until this point, the tests are largely technology facing (a term taken from Brian Marick’s Testing Quadrants, more on these later; Lisa Crispin covers them well in her book & in this test planning presentation). That is, they serve the development team by proving the business logic & providing confidence that the logic has not been compromised.

At the top of the triangle sit the acceptance (GUI) tests, which make up the smallest share of the test suite. Acceptance tests:

- are a tool for conversing with business stakeholders (as they are generally written in human-readable language)

- are a means of knowing when we’re done (developing a component / feature)

- check the UI by actually clicking buttons on that UI

- have a high dependency on other components / features

- are brittle, i.e. easily broken if the UI changes

- are the slowest of the 3 automated test levels to run

So if acceptance tests are slow & brittle, why do we go to the effort of writing them? Because they are the main tool for driving conversation between the development team & business stakeholders. The tests are generally examples of the requirements as specified by the business stakeholders, so they give those stakeholders a feel for how stable the code is.
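And a sketch of the top level, written so a business stakeholder could follow the Given / When / Then comments. A real acceptance test would drive the actual UI, typically through a browser automation tool such as Selenium WebDriver. To keep this self-contained I’ve assumed a hypothetical `FakeShopUI` class whose methods stand in for clicking buttons, so it illustrates the shape of such a test rather than being a working GUI test.

```python
class FakeShopUI:
    """Hypothetical stand-in for a real GUI; a real acceptance test would drive a browser."""

    def __init__(self):
        self.basket = []
        self.message = ""

    def click_add_to_basket(self, product):
        self.basket.append(product)
        self.message = f"{product} added to basket"

    def click_checkout(self):
        self.message = "Order placed" if self.basket else "Your basket is empty"


def test_customer_can_check_out_a_basket():
    # Given a customer has added a product to their basket
    ui = FakeShopUI()
    ui.click_add_to_basket("Widget")
    # When they click checkout
    ui.click_checkout()
    # Then they see a confirmation
    assert ui.message == "Order placed"


def test_checkout_with_empty_basket_is_refused():
    # Given a customer with an empty basket
    ui = FakeShopUI()
    # When they click checkout
    ui.click_checkout()
    # Then they are told the basket is empty
    assert ui.message == "Your basket is empty"


if __name__ == "__main__":
    test_customer_can_check_out_a_basket()
    test_checkout_with_empty_basket_is_refused()
    print("acceptance tests passed")
```

The comments carry the business-readable example; tools built for this (Cucumber-style runners, for instance) move that text into a separate feature file that stakeholders can read & edit directly.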

This is the automation pyramid. Alister Scott has extended it by introducing the concept of manual testing:

He also shows the intention of the tests at each level in this post responding to James Crisp’s original automation pyramid post:

The “all-seeing eye” sits at the top of the pyramid, encompassing acceptance (GUI) & manual tests.


Take a close look at the small print: “Are we building the right system?” & “Are we building the system right?”. This is a fundamental principle of “Agile Testing”: we (as testers) shouldn’t just be focussing on finding bugs at the end of the development cycle. Instead we should get involved with development as early as possible, for example by helping to drive out requirements as & when they’re being worked on, not 6 weeks after a document has been put together (the end of yet another lifecycle). I go into more detail in my post responding to the Test is Dead movement of late 2011.

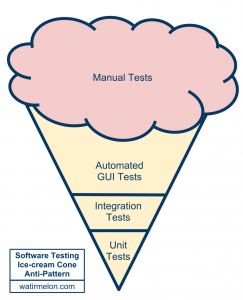

Anyway, back to the test pyramid… Alister goes on to talk about a common problem in organisations that use automation: the inverted pyramid, or ice-cream cone.

This situation occurs when too much emphasis is placed on automated GUI & manual tests, which can happen for numerous reasons, such as a lack of experience in writing lower-level tests or working with legacy code.
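One rough way to see which shape your own suite has is to count the tests at each level & compare neighbouring levels. The helper below is a hypothetical sketch, not a standard metric: it takes counts ordered from the bottom of the pyramid to the top (e.g. unit, integration, GUI, manual) and reports a pyramid when every level is smaller than the one beneath it, an ice-cream cone when every level is larger, and "mixed" otherwise.

```python
def suite_shape(counts_bottom_to_top):
    """Classify a test suite by the number of tests at each level (hypothetical helper).

    counts_bottom_to_top: e.g. [unit, integration, gui] or [unit, integration, gui, manual]
    """
    if len(counts_bottom_to_top) < 2:
        raise ValueError("need counts for at least two test levels")
    # Pair each level with the level directly above it.
    pairs = list(zip(counts_bottom_to_top, counts_bottom_to_top[1:]))
    if all(lower > upper for lower, upper in pairs):
        return "pyramid"
    if all(lower < upper for lower, upper in pairs):
        return "ice-cream cone"
    return "mixed"
```

For example, `suite_shape([500, 120, 30])` returns `"pyramid"`, while `suite_shape([40, 150, 300, 600])` (a handful of unit tests topped by lots of GUI & manual checks) returns `"ice-cream cone"`. Raw counts are only a proxy, of course; they say nothing about whether the tests at each level are the right ones.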

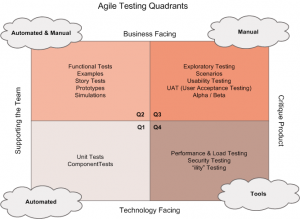

As I mentioned previously, a tool I’ve found very useful in determining which tests to run & what value they add is Brian Marick’s Testing Quadrants:

You can see examples of the Business Facing tests in the top half of the quadrants (the top half of the pyramid) & the Technology Facing tests in the bottom half of the quadrants (the bottom half of the pyramid).

Click on the image to get more detail from Lisa on how to use the quadrant.

Marekj (@rubytester) has also provided a great slide deck explaining the quadrants.

It’s another way of visualising the test levels & I find it complements the pyramid quite nicely. I’ll certainly be using both the Pyramid & the Quadrants in my hexagonal architecture post.

UPDATE: Elizabeth Hendrickson has revisited the testing quadrants in her CAST 2012 keynote “The Thinking Tester, Evolved” & Markus Gärtner has done a great write-up of Elizabeth’s new interpretation on his blog. I’ll need to revisit the quadrants myself when I get time to digest the video & Markus’ post.

UPDATE 2: Gojko Adzic is also rethinking the testing quadrants model. There’s some good conversation on that post.

Some useful links:

Test Pyramid (Cohn, credited as the originator of the pyramid in “Succeeding With Agile”)

Test Pyramid (Fowler)
